Estimating Programmers' Expertise to Augment Online Community Interaction

Background: This project was my first data-oriented research project. The work allowed me to practice basic approaches from Natural Language Processing and Data Mining to analyze programmers' information-seeking behaviors. It also helped me develop a better understanding of how programmers at different levels approach online resources, which was beneficial for me as a programming instructor.

The project got under way after I completed my coursework on information-seeking behavior, while I was learning data mining techniques in a course. I began discussing with my advisor the relationship between a person's expertise in a domain and how that person searches for information online while solving problems. I picked programmers as the target of the investigation because, as a programmer myself, I had intuitions that could guide the exploration. I then designed and executed the study to investigate whether beginners and experts differed in the types of online programming resources they visited.

My role(s): Researcher, Developer

Collaborator(s): Dr. Mark S. Ackerman

Motivation: Having an estimate (e.g., a classification from level 1 to level 5) of a member's expertise within a knowledge-based online community (e.g., StackOverflow or Quora) is useful. For instance, community members can better decide whom to ask questions. Obtaining an estimate, however, is difficult, as a significant contribution (e.g., asking and answering many questions) is usually required before even an approximate indication of expertise emerges.

Problem Solving: I hypothesized that a person's browsing history contains signals (i.e., page types such as API document or blog) of that person's expertise in a subject area (e.g., programming). I analyzed data collected from programmers (N=26) at different levels of expertise to test this hypothesis. I applied a support vector machine (SVM) to classify browsing records into different page types and used logistic regression to model the strength of the signals provided by different page types.

Interview & Data Collection

Crawling Web Pages

Classification

Regression

Data Analysis

Outcomes:

- Detecting novices and experts is plausible: page types can distinguish novices from experts to a certain degree.

- Detecting intermediates is still challenging: further analysis is required to estimate programming expertise for people who are in between (neither novices nor experts), namely the intermediates.

The outcomes of this project include several scholarly publications (link), a browsing history tool that lets users review and filter their browsing history from Google Chrome and Firefox, and a system of components that access web resources and categorize them to estimate programmers' expertise.

Summary:

Recruitment website: link

Date: 2013 ~ 2014

Research & Design Strategy

Use Semi-structured Interviews and a Questionnaire to Understand Programmers' Levels of Experience

I am a developer myself and have taught programming courses to adults and graduate students. I know how important it is for a developer to be able to find and consult online resources while learning to program or developing applications. As a teacher, one of the very first resources I introduce to my students when they learn a new programming language is the API (Application Programming Interface) documentation, usually provided by the official website for the language (e.g., Python). As a developer, I find useful resources online in different formats: blogs, forums, and Q&A communities. These experiences suggested that a programmer's browsing history could contain signals of expertise.

To investigate whether browsing history reflects a developer's level of expertise, I first gathered evidence on whether developers with different levels of expertise use or look for different types of programming resources. To collect such evidence, I recruited university students who were taking their first programming courses, university students who had prior programming training and project experience, and professional developers from a local programming meetup. Each participant was asked to fill out a questionnaire documenting their programming experience and training. The research team used these data to estimate participants' levels of expertise, which served as the ground truth for evaluating the machine estimation results.

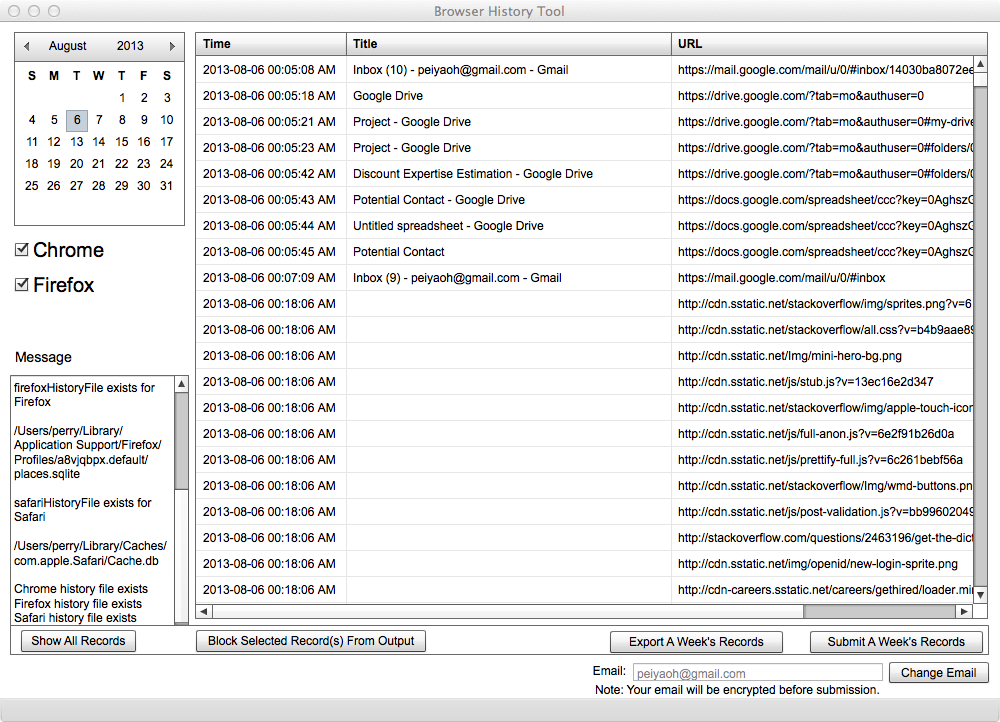

Develop a Browsing History Tool for Data Collection and Apply Critical Incident Analysis through Log Reflection to Understand Programmers' Information Seeking Behaviors

During the study period, I invited each developer to come into the lab and describe their recent experience (e.g., in the past two days) of doing programming tasks and consulting online programming resources for them. To support participants during this reflection, I developed a browsing history tool using Adobe Flex that allowed users to extract their browsing history from Google Chrome and Mozilla Firefox. The tool allowed participants to display, search, filter, and hide browsing history before uploading it for analysis.

During in-person meetings, I invited each participant to open the browsing history tool and review the programming resources they had visited recently, including how they got to each resource (e.g., by direct link or through a search engine, which can be distinguished based on whether search keywords were used prior to a page visit). At the end of each week, participants were asked to review and upload their browsing history. Through the interviews, I gathered qualitative evidence about the different occasions on which developers access programming resources. These observations provided some initial evidence of why developers with varying levels of expertise might visit different resources. For instance, novices might visit API documents by following suggestions from their instructors, while experts might consult Q&As on StackOverflow when trying to solve rare and difficult problems.
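The distinction between direct and search-driven visits can be sketched as a small heuristic over an ordered list of visited URLs. This is only an illustration, not the logic of the original Adobe Flex tool: the list of search hosts, the `q` query parameter, and the function names `search_keywords` and `tag_arrivals` are all assumptions made for the example.

```python
from urllib.parse import parse_qs, urlparse

# Assumed search hosts and query parameter; neither comes from the study.
SEARCH_HOSTS = {"www.google.com", "www.bing.com", "duckduckgo.com"}
QUERY_PARAM = "q"


def search_keywords(url):
    """Return the keywords in a search-results URL, or None for other pages."""
    parsed = urlparse(url)
    if parsed.netloc not in SEARCH_HOSTS:
        return None
    values = parse_qs(parsed.query).get(QUERY_PARAM)
    return values[0] if values else None


def tag_arrivals(urls):
    """Tag each non-search visit as 'search' or 'direct', in visit order.

    A visit counts as 'search' when the record immediately before it
    was a search-results page carrying keywords (hypothetical rule).
    """
    tagged = []
    pending_keywords = None
    for url in urls:
        keywords = search_keywords(url)
        if keywords is not None:
            pending_keywords = keywords
            continue
        arrival = "search" if pending_keywords else "direct"
        tagged.append((url, arrival, pending_keywords))
        pending_keywords = None
    return tagged


# Made-up browsing records for illustration only.
visits = [
    "https://www.google.com/search?q=python+list+sort",
    "https://docs.python.org/3/howto/sorting.html",
    "https://stackoverflow.com/questions/tagged/python",
]
tagged_visits = tag_arrivals(visits)
```

With these made-up records, the documentation page is tagged as a search-driven visit with the keywords attached, and the StackOverflow page as a direct visit. Real histories would need more care, for example with tabs opened in parallel or several result clicks after one query.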

Train Classifiers of Programming Resource Types to Characterize Programmers' Browsing History as an Indicator of Their Levels of Experience

After obtaining this initial evidence, I adopted the programming resource types (namely, page types) proposed by prior work and extracted examples of each from the data (i.e., browsing history) we collected. I manually reviewed and labeled 250 records per page type as training data. I calculated term frequency-inverse document frequency (TF-IDF) for each webpage as features and used Linear Support Vector Classification to train a binary classifier for each page type. The binary classifiers were then used to label the rest of the browsing history. With all records labeled with page types, I used the percentages of the different page types as features and applied logistic regression to investigate the relationship between these features and a developer's level of expertise.
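The pipeline described above can be sketched with scikit-learn, whose `TfidfVectorizer`, `LinearSVC`, and `LogisticRegression` classes match the techniques named. This is a minimal sketch under stated assumptions rather than the study's code: the page type names, the toy page texts, the participant vectors, and the rule of keeping the page type with the highest SVM score are all invented for illustration.

```python
from collections import Counter

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.svm import LinearSVC

# Hypothetical page types and toy labeled pages; the study instead
# labeled 250 real browsing records per page type.
PAGE_TYPES = ["api_doc", "tutorial", "qa"]
LABELED_PAGES = [
    ("method parameters return value reference", "api_doc"),
    ("class method signature parameters reference", "api_doc"),
    ("module functions reference parameters exceptions", "api_doc"),
    ("beginner lesson step by step guide", "tutorial"),
    ("learn loops lesson for beginner exercises", "tutorial"),
    ("getting started guide step one lesson", "tutorial"),
    ("question asked answers votes accepted answer", "qa"),
    ("question error answers comments votes", "qa"),
    ("how do i question accepted answer votes", "qa"),
]


def train_page_type_classifiers(labeled_pages, page_types):
    """Fit one TF-IDF vectorizer and one binary LinearSVC per page type."""
    texts = [text for text, _ in labeled_pages]
    vectorizer = TfidfVectorizer()
    features = vectorizer.fit_transform(texts)
    classifiers = {}
    for page_type in page_types:
        targets = [int(label == page_type) for _, label in labeled_pages]
        classifiers[page_type] = LinearSVC().fit(features, targets)
    return vectorizer, classifiers


def label_page(text, vectorizer, classifiers):
    """Assumed combination rule: keep the type with the highest SVM score."""
    features = vectorizer.transform([text])
    scores = {pt: clf.decision_function(features)[0]
              for pt, clf in classifiers.items()}
    return max(scores, key=scores.get)


def page_type_proportions(texts, vectorizer, classifiers, page_types):
    """Share of a participant's pages that falls into each page type."""
    counts = Counter(label_page(t, vectorizer, classifiers) for t in texts)
    return [counts[pt] / len(texts) for pt in page_types]


vectorizer, classifiers = train_page_type_classifiers(LABELED_PAGES, PAGE_TYPES)
history = ["function reference parameters", "beginner lesson guide",
           "question answers votes"]
proportions = page_type_proportions(history, vectorizer, classifiers, PAGE_TYPES)

# Invented per-participant proportion vectors and novice labels (1 = novice).
participant_features = [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1],
                        [0.4, 0.1, 0.5], [0.3, 0.1, 0.6]]
is_novice = [1, 1, 0, 0]
expertise_model = LogisticRegression().fit(participant_features, is_novice)
novice_probability = expertise_model.predict_proba([proportions])[0][1]
```

The fitted coefficients in `expertise_model.coef_` indicate how strongly each page type's share is associated with the novice label, which is one way to read "strength of signal" in this setup. With only a handful of toy rows the numbers themselves carry no meaning.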

Outcomes

Our results show that visits to tutorials, libraries, and Q&A pages provide a useful and conservative indication of whether a developer is a novice or an expert, but identifying intermediates remains rather difficult.

For instance, using visits to tutorial pages as the feature, I created a conservative binary classifier, with 80% as the threshold, that correctly identified novice programmers at the cost of false negatives.

| Novice Classifier | Ground Truth | Proportion |
|---|---|---|
| Yes | Novice | 4 of 4 |
| No | Novice | 4 of 13 |

On the other hand, using visits to code libraries and Q&A websites as the features, I created a conservative binary classifier, with 80% as the threshold, that correctly identified expert programmers at the cost of false negatives.

| Expert Classifier | Ground Truth | Proportion |
|---|---|---|
| Yes | Expert | 4 of 4 |
| No | Expert | 5 of 13 |
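The trade-off behind such a conservative classifier can be illustrated with a short sketch. It assumes the 80% threshold is applied to a per-participant score between 0 and 1 (for example, a predicted probability); the write-up does not specify this, and the scores, labels, and function names below are invented for the example rather than taken from the study.

```python
def conservative_flags(scores, threshold=0.8):
    """Flag a participant only when the score reaches the threshold."""
    return [score >= threshold for score in scores]


def proportion_by_flag(flags, truths):
    """For each classifier output, count (truly in class, total with that output)."""
    summary = {True: [0, 0], False: [0, 0]}
    for flag, truth in zip(flags, truths):
        summary[flag][1] += 1
        summary[flag][0] += int(truth)
    return {flag: tuple(counts) for flag, counts in summary.items()}


# Made-up scores and ground-truth labels (True = belongs to the target class).
scores = [0.95, 0.85, 0.60, 0.40, 0.30, 0.10]
truths = [True, True, True, False, False, False]

flags = conservative_flags(scores)
summary = proportion_by_flag(flags, truths)
# summary == {True: (2, 2), False: (1, 4)}
```

In this toy data, everyone flagged by the classifier truly belongs to the class (2 of 2), while one member of the class scores below the threshold and ends up among the unflagged (1 of 4). That missed participant is the kind of false negative a high threshold accepts in exchange for reliable positive flags.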

Developed participant recruitment website: link.

Developed screening questionnaire: link.

Recruitment webpage: https://sites.google.com/a/umich.edu/sps/

(publication, slides)